St. Cloud State University

theRepository at St. Cloud State

/'($).$)",*% .-$))!*,(.$*)--/,) +,.( ).*!)!*,(.$*)3-. (-

Designing & Implementing a Java Web Application

to Interact with Data Stored in a Distributed File

System

Punith Reddy Etikala

St. Cloud State University+ .$&'-.'*/-.. /

*''*1.#$-)$.$*)'1*,&-. #6+-, +*-$.*,3-.'*/-.. /(-$ .-

5$-.,, + ,$-,*/"#..*3*/!*,!, )*+ ) --3.# +,.( ).*!)!*,(.$*)3-. (-..# +*-$.*,3..'*/.. .#- )

+. !*,$)'/-$*)$)/'($).$)",*% .-$))!*,(.$*)--/,) 3)/.#*,$4 ($)$-.,.*,*!.# +*-$.*,3..'*/.. *,(*,

$)!*,(.$*)+' - *).. ,-1 2 '/(-.'*/-.. /

*(( ) $..$*)

.$&'/)$.# 3 -$")$)"(+' ( ).$)"0 ++'$.$*).*). ,.1$.#..*, $)$-.,$/. $' 3-. (

Culminating Projects in Information Assurance

#6+-, +*-$.*,3-.'*/-.. /(-$ .-

Designing & Implementing a Java Web Application to Interact with Data Stored in a

Distributed File System

by

Punith Reddy Etikala

A Starred Paper

Submitted to the Graduate Faculty of

St. Cloud State University

in Partial Fulfillment of the Requirements

for the Degree of

Master of Science

in Information Assurance

December, 2016

Starred Paper Committee:

Dr. Dennis Guster, Chairperson

Dr. Lynn Collen

Dr. Keith Ewing

2

Abstract

Every day there is an exponential increase of information and this data must be stored

and analyzed. Traditional data warehousing solutions are expensive. Apache Hadoop is a popular

open source data store which implements map-reduce concepts to create a distributed database

architecture. In this paper, a performance analysis project was devised that compares Apache

Hive, which is built on top of Apache Hadoop, with a traditional database such as MySQL. Hive

supports HiveQueryLanguage, a SQL like directive language which implements MapReduce

jobs. These jobs can then be executed using Hadoop. Hive also has a system catalog – Metastore

which is used to index data components. The Hadoop framework is developed to include a

duplication detection system which helps managing multiple copies of the same data at the file

level. The Java Server Pages and Java Servlet framework were used to build a Java web

application to provide a web interface for the clients to access and analyze large data sets present

in Apache Hive or MySQL databases.

3

Acknowledgement

This research paper about designing and implementing Java web applications to interact

with data stored in a distributed file system was undertaken using resources provided by the

Business Computing Research Laboratory of St. Cloud State University. Data used for the

analyses came from the St. Cloud State University library.

4

Table of Contents

Page

List of Tables ........................................................................................................................ 7

List of Figures ........................................................................................................................ 8

Chapter 1: Introduction .......................................................................................................... 11

Introduction ................................................................................................................ 11

Problem Statement ..................................................................................................... 14

Definition of Terms.................................................................................................... 15

Chapter 2: Literature Review and Background ..................................................................... 16

Challenges of working with Big Data ........................................................................ 16

Need for Distributed File System .............................................................................. 17

Architectures to support Big Data .............................................................................. 18

Performance Issues with Big Data ............................................................................. 18

Advantages of Hadoop and Map Reduce ................................................................... 22

Challenges of Effectively Integrating a Web with Hadoop and Map Reduce ........... 23

Chapter 3: Methodology ........................................................................................................ 26

Design of the Study .................................................................................................... 26

Web Application Technical Architecture .................................................................. 27

Data Collection .......................................................................................................... 28

Tools and Techniques ................................................................................................ 34

Hardware Environment .............................................................................................. 37

Software Environment ............................................................................................... 38

5

Page

Chapter 4: Implementation .................................................................................................... 39

Installing Java ............................................................................................................ 39

Installing SSH ............................................................................................................ 39

Installing and Configuring Apache Tomcat ............................................................... 40

Installing and Configuring MySQL Server 5.6.......................................................... 41

Disabling IPv6 ........................................................................................................... 42

Installing and Configuring Apache Sqoop ................................................................. 42

Installing and Configuring Apache Hive ................................................................... 44

Installing and Configuring Apache Hadoop .............................................................. 47

Enabling Secure Connection using SSH .................................................................... 52

Configuring Hostname ............................................................................................... 53

Loading Twitter Data from Text file to MySQL ....................................................... 54

Importing Data from MySQL to HDFS ..................................................................... 54

Creating Tables in Hive ............................................................................................. 55

Loading Data from HDFS to Hive Tables ................................................................. 61

Chapter 5: Analysis and Results ............................................................................................ 62

Access to Hadoop Cluster .......................................................................................... 62

Access to Apache Tomcat .......................................................................................... 70

Access to Java Web Application................................................................................ 72

UML Diagrams .......................................................................................................... 82

Summary .................................................................................................................... 85

6

Page

Chapter 6: Conclusion and Future Work ............................................................................... 88

Conclusion ................................................................................................................. 88

Future Work ............................................................................................................... 88

References……. ..................................................................................................................... 89

Appendix………… ................................................................................................................ 93

7

List of Tables

Table Page

1. Definition of Terms.................................................................................................... 15

2. Students table in MySQL ........................................................................................... 29

3. Majors table in MySQL ............................................................................................. 30

4. CirculationLog table in MySQL ................................................................................ 31

5. Virtual Machine Details ............................................................................................. 37

6. Students table in Hive ................................................................................................ 57

7. Majors table in Hive................................................................................................... 58

8. CirculationLog table in Hive ..................................................................................... 59

9. TwitterAnalysis table in MySQL and Hive ............................................................... 60

10. Comparison of Computation time of Hive vs MySQL .............................................. 87

8

List of Figures

Figure Page

1. Hadoop Architecture .................................................................................................. 26

2. Web Interface Technical Architecture ....................................................................... 27

3. Data Model in MySQL .............................................................................................. 32

4. Twitter Application Management .............................................................................. 32

5. Twitter Application .................................................................................................... 33

6. Twitter Application Key and Access Tokens Management ...................................... 33

7. Hadoop Cluster – All Applications ............................................................................ 62

8. Hadoop Cluster – Active Nodes of the cluster........................................................... 62

9. Hadoop Cluster – Lost Nodes of the cluster ............................................................. 63

10. Hadoop Cluster – Unhealthy Nodes of the cluster ..................................................... 63

11. Hadoop Cluster – Decommissioned Nodes of the cluster ......................................... 64

12. Hadoop Cluster – Rebooted Nodes of the cluster ...................................................... 64

13. Hadoop Cluster – All Active Applications ................................................................ 65

14. Hadoop Cluster – Application in detail ..................................................................... 65

15. Hadoop Cluster – Current run configurations ............................................................ 66

16. Hadoop Cluster – Logs .............................................................................................. 66

17. Namenode overview .................................................................................................. 67

18. Namenode information .............................................................................................. 67

19. Datanode information ................................................................................................ 68

20. Hadoop cluster logs from Namemode ...................................................................... 68

9

Figure Page

21. Available HDFS FileSystem data .............................................................................. 69

22. Apache Tomcat Homepage ........................................................................................ 70

23. Apache Tomcat Application Manager login .............................................................. 70

24. Apache Tomcat WAR file to deploy in Application Manager .................................. 71

25. Apache Tomcat Application Manager ....................................................................... 71

26. Web Application Login Page ..................................................................................... 72

27. Web Application Login Page error for empty submission ........................................ 72

28. Web Application Login Page error for authentication failure ................................... 73

29. Web Application Home Page as MySQL Query Processor ....................................... 73

30. Web Application error for invalid MySQL query ..................................................... 74

31. Web Application results for valid MySQL query ...................................................... 74

32. Web Application Hive Query Processor .................................................................... 75

33. Web Application error for invalid Hive query ........................................................... 75

34. Hive query processing in Hadoop ............................................................................. 76

35. Web Application results for valid Hive query ........................................................... 77

36. Web Application Time Comparison with MySQL and Hive .................................... 77

37. Web Application Time Comparison with MySQL and Hive in Line Chart .............. 78

38. Web Application Time Comparison with MySQL and Hive for given query ........... 78

39. Web Application showing list of tables in MySQL ................................................... 79

40. Web Application showing list of tables in Hive ........................................................ 79

41. Web Application describing Hive table ..................................................................... 80

10

Figure Page

42. Web Application describing MySQL table................................................................ 80

43. Web Application describing cookie usage ................................................................. 81

44. User Login Sequence diagram ................................................................................... 82

45. MySQL Query Processor Sequence diagram ............................................................ 83

46. Hive Query Processor Sequence diagram .................................................................. 84

47. Line Chart Sequence diagram .................................................................................... 85

48. Architecture for MySQL and Hive Performance Comparison .................................. 86

11

Chapter 1: Introduction

Introduction

The interface to any data is critical to being able to use and understand that data. The

interface design is particularly important when working in the new area of Big Data. The concept

of “Big Data” presents a number of challenges to Information System professionals and

especially Web designers. In fact, one of the leading software analytic companies has discretely

broken them into five categories as summarized below:

1. Finding and analyzing the data quickly

2. Understanding the data structure and getting it ready for visualization

3. Making sure the data is timely and accurate

4. Displaying meaningful results (for example, using cluster analysis rather than plot the

whole data set)

5. Dealing with outliers (how to ensure they get proper attention) (SAS, 2015)

All of the categories are important in obtaining success in using Big Data and Data

Analytics. However, this paper will focus primarily on the categories related to finding and

extracting data from a potential distributed file system and being able to visualize it in a timely

manner.

The work of Jacobs, 2009 puts this into perspective and specifically states that as your

data set size grows, the probability that your applications that use that data will become

untenable from a performance perspective increases as well. This is particularly true if a Web

interface is used to aid in data visualization. Further, Jacobs, 2009 explains that the decay in

performance is caused by several factors and each case needs to be carefully assessed and the

12

application involved tuned to ensure adequate performance. One important example he cites

deals with the capabilities of traditional relational databases. Specifically, he states that: it’s

easier to get the data in than out. Most databases are designed to foster efficient transaction

processing like inserting, updating, searching for, and retrieving small amounts of information in

a large database.

It appears that platforms have been created to deal with the mass and structure of Big

Data. Further, as one might expect they utilize distributed processing as well as software

optimization techniques. An excellent summary of this work is presented by Singh & Reddy,

2014. In this work they discuss both horizontal distributed file systems such as Hadoop (and its

successor Spark) and vertical systems that rely on high performance solutions which leverage

multiple cores. This paper will focus on a specific horizontal system, Hadoop, because the goal

herein is to assess performance characteristics of that system when compared to a traditional

MYSQL DBMS in cases in which they are accessed via a Web interface. The Hadoop file

system and its associated components create a complex, but efficient architecture that can be

used to support Big Data analysis. Further, a modular approach can be employed with the

Hadoop architecture because a Web interface can interact with Hive (the query module) and

efficient performance can be obtained by using the map reduce function that allows Hadoop to

function as a distributed file system that can run in parallel.

It is worthwhile to look at the suggested architecture for the Hadoop based data analytics

ecosystem and compare it with the traditional scientific computing ecosystem. The work of Reed

& Dongarra, 2015 on Exascale computing explains this quite well. This work delineates in detail

all the components in the Hadoop architecture, including the map-reduce optimization software.

13

Because of the interaction with the Web interface the explanation of Hive which is a MapReduce

wrapper developed by Facebook, Thusoo et al., 2009, is also useful. This wrapper is a good

match for the Web interface design because of its macro nature which makes the coding easier

because programmers don’t need to directly address the complexities of MapReduce code.

Ultimately, if analytics are required via the Web interface the Hadoop based data analytics

ecosystem could be considered a unified system because it includes innovative application level

components such as R, which is an open source statistical programming language, which is

widely used by individual researchers in the life sciences, physical sciences, and social sciences,

(Goth, 2015). Goth further states that having a unified system makes the discovery process faster

by "closing the loop" between exploration and operation, which reduces the potential for error

when compared to a different systems approach. Interestingly, there is a trend to make data

scientists responsible for both exploration and production. This paper addresses the production

issue by integrating a Web interface.

Big data is a field that is still growing. Some of the areas that are still emerging are

improving the storage solutions, access times and optimization software will be explored by data

scientists. Najafabadi et al., 2015, felt that relevant future work might involve research related to:

defining data sampling criteria, domain adaptation modeling, defining criteria for data sampling

and obtaining useful data abstractions, improving semantic indexing, semi-supervised learning,

and active learning. Certainly, active learning could benefit from the optimized use of Web

interfaces.

In sum, this paper will use a Hadoop based data analytics ecosystem to support the design,

implementation and optimization of a Big Data application. Further, to assess its potential

14

advantages and its performance this system will be compared to a traditional DBMS using the

same Web interface. Special attention will be paid to the additional overhead a Web interface

places on the system.

This additional overhead is often misunderstood and only evaluated from a single

dimension. To understand the full effect overhead one needs to look at the total response time

model which is quite complex and involves a number of components.

A good representative example of this model is offered by Fleming, 2004: User -Application-

Command _CPULocalComputer _NICLocalComputer _Network-Propagation _Switch

_Network-Propagation _Switch _Network-Propagation _NICFile-Server _CPUFile-Server

_SCSI-bus _DiskRead then traverse the path in reverse for the reply.

When evaluating this model in terms of a Web based interface to a distributed file system

the additional delay caused by the Web service application coupled with the added network load

can have a detrimental effect on performance. Evaluating the extent that this occurs is one of the

primary goals of this paper.

Problem Statement

Given that the literature review indicates that Big Data is here to stay and the analysis of

such data in a timely manner will continue to be problematic there is a need to conduct

performance related research. Further, the state of the current technology requires a fair amount

of sophistication on the part of an end-user to deal with the parallelization often invoked to

provide the desired speed up.

Therefore, this paper will use a Hadoop test-bed with live data to test the performance of a Web

interface devised using the Java, JSP framework when deployed using both a Hadoop and a

15

traditional MySQL database. The primary metric will be elapsed time from client to server which

will allow measurement of end-to-end delay and provide a user interface to execute queries on

databases and export results of their analysis based on user access level.

Definition of Terms

Table1: Definition of Terms

HDFS

Hadoop Distributed File System

YARN

Yet Another Resource Negotiator

GUID

Global Unique Identifier

DBMS

Database Management System

PK

Primary Key

FK

Foreign Key

Hadoop

Framework that allows for the distributed

processing and storage of very large data sets

Hive

Data warehouse

MapReduce

Distribute work around a cluster

DSA

Digital Signature Algorithm

JSP

Java Server Pages

16

Chapter 2: Literature Review and Background

Challenges of working with Big Data

Because of the large volume of data involved, there are many challenges when working

in the area of Big Data. The complexity which is described by Jagadish et al., 2014. Specifically,

they state that working in the area of Big Data is a multi-step process and it is important not to

ignore any of the steps. In the case of this paper, of course the important step would be to

evaluate the interaction of the Web interface with the underlying distributed file system. Jagadish

et al., 2014, identified the following required steps: acquisition, information extraction, data

cleansing, data integration, modeling/analysis, interpretation and reporting. Too often one or

more of the steps are ignored and too much focus is placed on the reporting phase and the

“visualization of the results” which often can result in erroneous reporting. Therefore, the Web

interface devised and tested herein, will need to be evaluated in terms of accuracy and reliability

as well.

Of course, many of the challenges stems from the complex computing environments required to

support Big Data. There is a real challenge finding analysts with the technical maturity level

needed to support the acquisition and data integration steps that are critical before data modeling

can even take place (Morabito, 2015). So therefore, an understanding of the Exascale computing

structure described earlier by Reed & Dongarra, 2015 is crucial in being successful in the early

steps of data analytics. In the case of this paper, the architecture needs to be expanded to

encompass a Web interface.

17

Need for Distributed File System

The increased volume of data that results from a Big Data concept drastically complicates

analytic endeavors. That is not to say that traditional methods of accessing and managing large

data sets still have validity and usefulness. With a Web interface there may be a need to support

millions of hit scenarios. A primary limitation of traditional methods is that they are often not

scalable and may involve additional data set types, particularly unformatted data (Hu, 2014).

Traditional data storage methods offer a starting point and can be imported into newer distributed

systems so that scalability and adequate access performance can be realized. Generally speaking,

the new system would rely on some type of distributed processing and would include concepts

such as: ETL (extract, transform and load), EDW (enterprise data warehouse) SMP (symmetric

multi-processing) and distributed file systems (such as Hadoop). Obviously, while distributed

systems bring more processing power to the table it is critical that there is software in place to

manage the multiple threads that will be generated. This is provided in the case of Hadoop by the

map-reduce function. Multi-threading is also critical within the Web interface as well, so that if

need be a million of hits scenario can be supported.

While not part of the operational research undertaken herein, there are other options to

address the extraction logic from distributed data stores. A very popular option is the concept of

NoSQL databases. Traditional relational model database imposes a strict schema, this is in

contrast to many of the concepts within Big Data which are based on data evolution and

necessitate scaling across clusters. Thus, NoSQL databases support schema-less records which

allow data models to evolve (Gorton & Klein, 2014). The four most prominent data models

within this context according to Gorton & Klein, 2014 are:

18

1. Document databases (often XML or JSON based in MongoDB)

2. Key-value databases (such as Riak and DynamoDB)

3. Column-oriented databases (such as HBase and Cassandra)

4. Graph databases (such as Neo4j and GraphBase)

Architectures to support Big Data

There is much support for the concept of a distributed file system offering an effective

platform to support Big Data. While there may be other viable options in terms of design or

functionality, but distributed file systems by far offer the most cost effective solution (Jarr,

2014). A prime example of this is Hadoop, which is designed to deploy a distributed file system

on cheap commodity machines (Reed & Dongarra, 2015).

It also is interesting to note that the architecture to capture the Big Data in the first place

is expanding as well. This environment is personified by the Internet of Things (IoT) concept. It

relies on interconnected physical objects which effectively creates a mesh of sensor devices

capable of producing a mass of stored information. These sensors based networks pervade our

environment (e.g., cars, buildings, and smartphones) and continuously collect data about our

lives (Cecchinel, Jimenez, Mosser & Riveill, 2014). Thus, the use of it will further propagate the

legacy of Big Data.

Performance Issues with Big Data

Performance issues in Big Data stem from more than just the large amounts of data

involved. However, Big Data is characterized by other dimensions as well. Jewell et al., 2014 has

actually identified four dimensions:

1. Volume (Big data applications must manage and process large amounts of data),

19

2. Velocity (Big data applications must process data that are arriving more rapidly),

3. Variety (Big data applications must process many kinds of data, both structured and

unstructured) and

4. Veracity (Big data applications must include a mechanism to assess the correctness of the

large amount data of rapidly).

These dimensions provide multiple parameters from which to tune a system from a

performance perspective. Therefore, the computing environment required will need to be adept

in dealing with real-time processing, complex data relationships, complex analytics, efficient

search capabilities as well as effective Web interfaces. Given the current options, a private cloud

could be configured to maximize both processing speed as well as IO movement. Such a cloud

would lean heavily on distributed processing, distributed file systems and multiple instance of

the Web service. Further, dynamic allocation of resources would need to be implemented as

well. This might involve multiple instance of the Web service replicated across multiple hosts

with load balancing invoked.

The work of Jacobs, 2009 is very useful in putting the concept of Big Data into

perspective. He states that people often expect to extract data in seconds that took months and

months to store. So one could interpret this to mean it is a lot easier to get data into a traditional

relational database then get it out. It can be treated as a mass storage device and “chunks” of the

total can be extracted for partial processing, but when the Big Data is analyzed in bulk, the

scalability is not there and performance takes a nose dive. This is further compounded when Web

interfaces are involved. He further states that anticipating what “chunks” are needed and

extracting them to a data warehouse can help, but optimizing systems to use their full processing

20

and IO capabilities is challenging. For example, with reasonable numbers of transactions random

processing can be advantageous. However, when using mechanical drives an analysis algorithm

that utilizes random access memory may actually run slower than the same data can be read in

sequence from a mechanical drive. Without a doubt, the concept of distributed file systems is a

step in the right direction, but they too have limitations such as network latency. This network

latency may further complicate the performance of the Web interface if the client connection is

sharing the same network with a distributed file system. Hence sound network design within a

private cloud is critical. It will then be necessary for future systems to encompass designs that

expand the boundaries of current day thinking. No doubt the analyses of huge datasets will

become routine. A ramification of this case is that analysts that will be successful in analyzing

those data sets will need to look beyond off-the-shelf techniques and implement techniques that

take advantage of the environmental architecture (such as cloud computing), optimize the

hardware resources and devise/implement algorithms designed specifically to deal with Big Data

in an optimized hardware environment. Of course, if a Web interface is the entry point of that

system, it will need to be optimized and properly secured too.

It has been established that the volume of processing within Big Data requires a well-

designed architecture if reasonable performance is to be obtained. As previously stated the work

of Reed & Dongarra, 2015, provides excellent insight into Exascale computing. A foundation for

this architecture is the concept of a distributed storage system which would allow the data to be

extracted from multiple devices simultaneously (Chang et al., 2008). Of course the Hadoop file

system follows this logic. One of the benefits of Hadoop is that it is basically a data-analytics

cluster that can be based on commodity Ethernet networking technology and numerous PC nodes

21

(even a generation or two old) containing local storage. This model goes a long way in providing

a cost effective solution for large scale data-analytics (Lucas et al., 2014). Hadoop could then be

viewed as the logic fabric to bind them together. This characteristic made it easy to create a test-

bed environment for this paper. In fact, the resources needed were quickly configured in the

author’s private cloud using virtualization software.

A key component in the Hadoop system implementation is the Map Reduce model (Dean

& Ghemawat, 2004). First of all, Map Reduce is designed to facilitate the parallel processing

function within Hadoop applications. It is designed to utilize multi-core as well as processors

distributed across multiple computing nodes. The foundation of the Map Reduce system is a

distributed file system. Its primary function is based on a simple concept: Large files are broken

into equal size blocks, which are then distributed across, in our case, a commodity cluster and

stored. In our case the storage was within a private cloud and it was critical to implement fault

tolerance so therefore each block was stored several times (at least three times) on different

computers nodes.

A challenge with undertaking a performance analysis of this type is dealing with new technology

and learning new things. The authors’ primary background in deal with large data sources was a

traditional relational data base structure. Fortunately, a couple of tools are available to assist in

extracting data from the Hadoop file system. First there is “PIG” which was devised by Yahoo!

to streamline the process of analyzing large data sets by reducing the time required to write

mapper and reducer programs. According to IBM 2015b, the pig analogy stems from actual pigs,

who eat almost anything, hence, the PIG programming language is designed to handle any kind

of data! While it boasts a powerful programming language it is basically new syntax and requires

22

time to master. Another option Hive, uses an SQL derivative called Hive Query Language

(HQL) so that the developer is not starting from scratch and has a much shorter learning curve.

While HQL does not have the full capabilities of SQL it is still pretty useful (IBM, 2015a). It

completes its primary purpose quite well which is to serve as a front end to simplify MAP

REDUCE jobs that are executed across a Hadoop Cluster.

Advantages of Hadoop and Map Reduce

While the cloud architecture makes available numerous dynamically allocated resources

there must be some type of strategy to be able to multi-thread applications in a cost effective

manner. Hadoop is able to provide that efficient and cost effective platform for distributed data

stores. A key component is the Map Reduce function (MR). This function provides the means to

connect the distributed data segments in a meaningful way and take advantage of parallel

processing to ensure optimum performance. Clearly, the primary goal is to use these components

to facilitate the analysis of Big Data in a timely fashion. For applications that might still run in a

relational world, MR can also be used with parallel DBMS. In cornerstone applications like ETL

systems it can be complementary to DBMSs, since databases are not designed to be efficient at

ETL tasks.

In a benchmark study using a popular open-source MR implementation and two parallel DBMSs,

Stonebraker et al., 2010 found that DBMSs are substantially faster than MR open source systems

once the data is loaded, but that loading the data takes considerably longer to load in the database

systems. Dean & Ghemawat, 2010 expanded on the interrelationship between MapReduce and

parallel databases and found MR provides many significant advantages over parallel databases.

First and most important, MR provides fine-grain fault tolerance for large jobs and accordingly

23

failure in the middle of a multi-hour execution does not require restarting the job from scratch.

This would be especially important for Web interfaces that typically do not have check pointing

built in. Second, MR is most useful for handling, data processing and data loading in a

heterogeneous system with numerous varied storage systems (which describes a private cloud).

Third, MR is an excellent framework to support the execution of more complex functions than

are not directly supported in SQL. In summary, MR can be an effective means of linking

complex data parts together no matter the architecture, but is especially effective when used in

conjunction with Hadoop (Reed & Dongarra, 2015).

Challenges of Effectively Integrating a Web with Hadoop and Map Reduce

As one would expect the distributed nature of Hadoop complicates devising an effective

Web interface. The motivation for the Web interface is to allow less technical people to be able

to get around submitting static pieces of code from the command line. Of course that code will

have to deal with components such as the mapper and a reducer.

While there is a rudimentary Web interface that allows the submission HiveQL

statements it lacks the depth needed for sophisticated analysis. This is included with Apache as

part of the Hive source tarball.

Devising a sophisticated Hadoop Web Interface requires a different approach. According

to Logical Thoughts on Technology, 2013 the first step is to create a generic job submitter, one

that can then be used in a service call in the Web application. This user interface (UI) would

present some nice, clean, easy to use interface, next the user would make same sequence of

selections, and then they would click a button to start their job. It therefore follows then that on

the back-end, the request would be passed to a service call where the parameter set would be

24

processed and turned into a Hadoop job, and thereby submitted to the cluster. Logical Thoughts

on Technology, 2013 summarizes the three processing components as follows:

1. Something to gather up the set of parameters for each job

2. Something to convert string class names into actual classes

3. Something to step through the parameters, then perform any formatting/processing, and

submit the job

Last, they suggest that the production Web application that will perform the suggested

function be written in Java so that class objects are easily obtainable in accordance with sound

OOPs programming.

While the advantages of using Java for production Web applications are well known, it is

appropriate to provide a brief explanation of the “Java Server Pages framework” which will be

used to devise the Web interface for this project. As stated earlier, the cloud computing

environment can be quite complex. One of the primary purposes of the JSP framework is to

create a transparent interface to the infrastructure so that the programmer can more easily focus

on the application.

This brings us to the last topic of the Literature Review which deals with providing

adequate performance. A typical industry based standard for acceptable performance is a client

response time of three seconds or less. This is challenging under the best of circumstances, but

even more elusive in the world of Big Data.

As state earlier in the work of Fleming, 2004 indicated that it was just not how quickly

data could be read from the data source, but rather the result of end-to-end delay that an end-user

is concerned about. Guster, O’Brien & Lebentritt, 2013 address this as follows: “Given that the

25

network delay on the Internet in the US might often take .5 seconds in each direction it is

important to optimize each of the parameters. Further, one needs to realize that this whole

algorithm is based on queuing theory, which means that there is an interaction among all the

parameters. In other words, a delay of .0005 instead of .0001 at the first parameter won’t simply

result in .0004 seconds of additional response time. Rather, it will propagate through the entire

algorithm and the delay will get a little longer with added wait time at each successive

parameter. To put this in perspective, if one assumes a geometric progression through all 12

parameters in the algorithm above (Fleming, 2004) the result in total added delay would be close

to 1 second (.8192)”.

While Guster, O’Brien & Lebentritt, 2013 did realize acceptable performance in regard to

providing a Web interface delay of three seconds or less they were working in a less stringent

environment. They were using Casandra rather than Hadoop (which integrates the MR) function,

the volume of data was much less and they were not working in a true cloud computing

environment. It will be interesting to compare performance metrics between the systems and

with a traditional database.

26

Chapter 3: Methodology

Design of the Study

The following diagram explains the Hadoop architecture used for this project. It is

centered on the HDFS file system which is used to store large data files. To achieve the desired

parallelism MapReduce and the YARN framework is used to process HDFS data and provide

resource management. Apache Hive is built on top of Hadoop to provide a data summarization

and analysis on HDFS data. Apache Sqoop is used to transfer data between relational databases

and the HDFS file system. The Java Web application is originated by using JSP and Servlet

framework and allows reports to be displayed and provides the Web application needed to

compare performance times between MySQL and Hive. Similar architecture referred by Afreen,

2016 to work on design and performance characteristics.

Figure 1: Hadoop Architecture

HDFS

(Redundant, reliable storage)

YARN

(Cluster resource management)

MapReduce

(Data processing)

Hive

(HiveQL query)

Sqoop

(Data exchange)

Analytics

(Java Web application)

RDBMS

(MySQL)

27

Web Application Technical Architecture

The user interface is created by using Java Server Pages. When a user submits or calls

some function like executing queries on a MySQL or Hive database a comparison of execution

time between the MySQL and Hive queries is recorded. These function calls might include:

Displaying a table structure, Generating charts etc. The interface then calls Action Classes,

Servlets generally, which call Service functions and then the Data Access Object layer to connect

to the database to pass the results to Java Server Pages. Generally, the results are displayed in

table structure as well as Line charts and provide a basic synopsis of performance regarding

query execution on MySQL and Hive databases.

Figure 2: Web Interface Technical Architecture

Web Interface (JSP)

Action Classes

(Servlets)

Façade Layer

(Service)

DAO Layer

(Database)

Database

(MySQL/HIVE)

28

Data Collection

The data has been collected from two resources

1. Saint Cloud State University campus library

The original data used in this project were provided by Saint Cloud State University

campus library. This data is available in a MySQL database. It contains three tables: Students,

Majors and CirculationLog. The Students table contains the student’s basic information and each

student can be distinguished by a unique GUID key, UniqueId and there is also a

UniqueStudentId which acts as the primary key. The Majors table has information about students

registered for a particular major. The Majors table is linked to the Students table by a foreign

key, UniqueStudentId and UniqueMajorId act as the primary key for this table. The

CirculationLog table is linked to the Students table by the foreign key, UniqueStudentId and

UniqueCirculationId act as the primary key for this table. For Hadoop analysis, the data in the

CirculationLog table has been regenerated many times to increase its volume, which validates

the big data concept within Hadoop. The following table shows in detail information about the

Students, Majors and CirculationLog tables.

29

Table 2: Students table in MySQL

Field

Type

UniqueStudentId (Primary Key)

INT

UniqueId

VARCHAR

QPP

FLOAT

HS_GPA

DECIMAL

HS_GPAScale

DECIMAL

HS_Rank

INT

HS_GraduationDate

DATETIME

HS_Name

VARCHAR

HS_Code

VARCHAR

HS_City

VARCHAR

HS_State

VARCHAR

HS_Zip

VARCHAR

HS_MnSCURegion

VARCHAR

HS_District

VARCHAR

HS_DistrictCode

VARCHAR

ACTScore

DECIMAL

LibraryUsed

BOOLEAN

30

Table 3: Majors table in MySQL

Field

Type

UniqueMajorId (Primary Key)

INT

UniqueId

VARCHAR

Major

VARCHAR

MajorCode

VARCHAR

MajorProgram

VARCHAR

MajorDepartment

VARCHAR

MajorSchool

VARCHAR

MajorCollege

VARCHAR

FY

VARCHAR

UniqueStudentId (Foreign Key)

INT

31

Table 4: CirculationLog table in MySQL

Field

Type

UniqueCirculationId (Primary Key)

INT

UniqueId

VARCHAR

YearTerm

VARCHAR

TermName

VARCHAR

Date

VARCHAR

DateOfTerm

INT

Hour

VARCHAR

Action

VARCHAR

Id

VARCHAR

Budget

VARCHAR

Profile-id

VARCHAR

Barcode

VARCHAR

Material

VARCHAR

Item-status

VARCHAR

Collection

VARCHAR

Description

VARCHAR

Doc-title

VARCHAR

UniqueStudentId (Foreign Key)

INT

32

Figure 3: Data Model in MySQL

2. Twitter App

This application allows additional Big Data to be downloaded in real-time data from the Twitter

company’s server. “https://apps.twitter.com/” internet site allows for us to create a Twitter App.

Refer Twitter API Overview for details.

In the Application Management window, “Create New App” allows us to make an

application.

Figure 4: Twitter Application Management

33

Once the application is created successfully, On the Application Management screen, the

newly created Twitter Application appears.

Figure 5: Twitter Application

To spread out the newly created Twitter Application one must sail to the “Keys and

Access Tokens” tab, where Consumer Key also called as Application Programming Interface

Key, Consumer Secret or Application Programming Interface Secret Key, Access Token Key

and Access Token Secret Key are the 4 secret keys. These keys allow the Java program to

connect to the Twitter Application to retrieve the data from the Twitter company server.

Figure 6: Twitter Application Key and Access Tokens Management

Refer to Appendix A for the Java source code, which fetches data and writes it in a local

file. To perform analysis with Hadoop, 160GB of data was gathered from the Twitter server.

TwitterFeeds.java, available in Appendix A is used to download raw data, which is in json

format which is consistent with the object oriented programming approach used herein.

Converter.java, available in Appendix A is used to parse the content within the json object and

34

gather the required data to perform analysis with Hadoop. There are four main “objects” within

the API: Tweets, Users, and Entities (see also Entities in Objects), and Places in the feeds.

Tools and Techniques

Apache Sqoop

Sqoop is a tool developed to transfer data between Hadoop databases and relational databases.

“Sqoop is used to import data from a relational database management system (RDBMS) such as

MySQL or Oracle or a mainframe into the Hadoop Distributed File System (HDFS), transform

the data in Hadoop MapReduce, and then export the data back into an RDBMS”. Here, Sqoop is

used to transfer data from a MySQL database to HDFS. Refer Sqoop User Guide (v1.4.6) for

more details.

MySQL

The MySQL software delivers a very fast, multi-threaded, multi-user, and robust SQL

(Structured Query Language) database server. MySQL Server is intended for mission-critical,

heavy-load production systems as well as for embedding into mass-deployed software. It is a

special purpose programming language which was designed for managing data held in relational

databases. This has a wide scope of functions including: data insert, delete, query, update, and

schema creation and modification functions. Here, MySQL acts as traditional database with large

data. Refer A Quick Guide to Using the MySQL APT Repository for more details.

Apache Tomcat

This acts a web server to host the project. Apache Tomcat Servlet/JSP container acts as

an entry point of the documentation bundle. Apache Tomcat is a platform for developing and

deploying web applications and web services. Tomcat is an open source web server developed

35

by Apache Tomcat Foundation released under Apache License. Here, Tomcat acts as a web

application server to support the project. Refer Apache Tomcat 8 for more details.

Java Server Pages Framework

The JSP framework is built on top of a Java Servlet API, it provides tag based templates,

follows the server programming model and it is document centric. The Java code can be

compiled and executed when a request is received in JSP. Here the JSP framework is used to

develop enterprise web applications which allow end users to connect to the Hadoop Hive

database/MySQL database and analyze their performance when dealing with large data sets.

Apache Hive

The Apache Hive data warehouse software facilitates querying and managing large datasets

residing in distributed storage. Hive provides a mechanism to project structure onto this data and

query the data using a SQL-like language called HiveQL. Here Hive acts as data warehouse

which stores HDFS data and HiveQL allows users to perform queries against the HDFS, which

starts MapReduce jobs to fetch the results by implementing a multi-threading approach. Refer

Apache Hive for more details.

Hadoop Key Terms

Namenode: is the centerpiece of the Hadoop file system. Namenode records the structure

of all files in the file system and keeps tracks of where the file data is stored.

Datanode: is the data store in HDFS. A cluster can have more than just a single data node

and can implement more than one data replication across them.

SecondaryNamenode: is a dedicated node in Hadoop, whose function is to take

checkpoints of file system metadata information present in Namenode.

36

JobTracker: is a service within Hadoop that runs Hadoop MapReduce jobs on specific

nodes in the cluster.

TaskTracker: JobTracker creates tasks like Map, Reduce and Shuffle operations for the

TaskTracker to perform.

ResourceManager: Manages the distributed applications running and keeps master lists of

all resource attributes across the Hadoop Cluster.

NodeManager: It is responsible for individual computer nodes in a Hadoop Cluster. It is

YARN’s management node agent.

Shell Commands

sudo: sudo allows the users to execute the commands in superuser or other users, whose

accounts are present in sudoers file. By default, sudo asks for a password to authenticate and

allows the user to execute the commands in superuser or another user form for a specific time.

apt-get: apt stands for Advanced Packing tool. “apt-get” is used to install the new

software packages, upgrade the existing software packages and update the package list index. It

has many advantages over other Linux management tools that are available.

which: the which command in the Linux atmosphere is used to show the full path of the

commands.

wget: wget is the command which allows one to download the files or software without

interaction from the user which means it doesn’t need the user to be logged in and accordingly

the wget command runs in the background. It supports HTTP, HTTPS and FTP protocols.

tar: the tar command is used for archiving files, which means storing or extracting the

files from an archive file which has a .tar extension.

37

mv: the mv command is used to move a file from its source to a directory or to rename a

file from source to destination.

cp: the cp command is used to copy a file from its source to a directory.

nano: the nano command is used to simply open and edit the contents of the file or create

a new file and save the file, even though “vi” and “emacs” does the same work, nano is a simple

command which can be run without any options.

source: the source command is used to evaluate a file or resource such as a tcl script. It

takes the given contents and passes it to the tcl interpreter which returns the command if it exists.

If an error occurs, it simply returns the error.

ln: the ln command is used to make links between files.

chown: chown is used to change the ownership of a file. Only the super user can change the

ownership of any file. fchown, lchown also fit into the same category.

Hardware Environment

Four Virtual Machines using the Ubuntu 14.04.3 Operating System

Table 5: Virtual Machine Details

IP Address

Number of Cores

RAM

CPU Clock Speed

Node Name

10.59.7.90

8

16GB

2200Mz

masternode

10.59.7.91

2

4GB

2200Mz

datanode1

10.59.7.92

2

4GB

2200Mz

datanode2

10.59.7.93

2

4GB

2200Mz

datanode3

38

Software Environment

1. Java 1.7.0_79

2. OpenSSH 6.6.1

3. MySQL Server 5.6

4. Apache Hadoop 2.6.2

5. Apache Hive 1.2.1

6. Apache Sqoop 1.4.6 – For Hadoop 2.x

7. Apache Tomcat7

8. Eclipse IDE for Java EE Developers

39

Chapter 4: Implementation

Installing Java

The following commands will update the package index and install the Java Runtime

Environment. Refer The Java EE 5 Tutorial for more details.

sudo apt-get update

sudo apt-get install openjdk-7-jre

The openjdk-7-jre package contains just the Java Runtime Environment. If one wants to

develop java programs, then the openjdk-7-jdk package would need to be installed.

The following command is used to verify that java installed.

java -version

Installing SSH

There are two components of SSH:

SSH: This command is used to connect to remote client machines, generally invoked by

the client.

SSHD: The daemon which runs on the server, allowing the clients to connect to the

server.

SSH can be installed by using the following command.

sudo apt-get install ssh

To locate the pathname for the SSH or SSHD commands the which command may be

used.

which ssh

/usr/bin/ssh

40

which sshd

/usr/sbin/sshd

Installing and Configuring Apache Tomcat

One begins by downloading the tomcat binary from the tomcat source repository by using

the following command.

wget http://mirror.cc.columbia.edu/pub/software/apache/tomcat/tomcat-

8/v8.0.32/bin/apache-tomcat-8.0.32.tar.gz

Extract the .tar.gz file and move it to the appropriate location.

tar xvzf apache-tomcat-8.0.32.tar.gz

mv apache-tomcat-8.0.32 /opt/tomcat

Adding a tomcat home directory to path.

sudo nano ~/.bashrc

export CATALINA_HOME=/opt/tomcat

sudo source ~/.bashrc

Configuring tomcat user roles by editing tomcat-users.xml.

nano $CATALINA_HOME/conf/tomcat-users.xml

<tomcat-users>

<role rolename=”manager-gui”/>

<role rolename=”admin-gui”/>

<user username=”<username>” password=”<password>”

roles=”manager-gui, admin-gui”/>

</tomcat-users>

41

<tomcat-users>: Users and roles are configured.

<role>: Specifies list of roles.

<user>: User's username, password and roles are assigned under this tag. Users can have

multiple roles defined through a comma delimited list.

Here, the manager-gui role allows the user to access the manager web application

(http://localhost:8080/manager/html) and the admin-gui role allows the user to access the host-

manager web application (http://localhost:8080/host-manager/html).

By default, the tomcat server runs on port 8080, but this can be changed by modifying the

server.xml file in the $CATALINA_HOME/conf folder.

To start the tomcat server:

$CATALINA_HOME/bin/startup.sh

To stop the tomcat server:

$CATALINA_HOME/bin/shutdown.sh

Installing and Configuring MySQL Server 5.6

MySQL installation is made simple by using an ‘apt-get’ command. Open the terminal

window in masternode, and use the following command:

sudo apt-get install mysql-server-5.6

This install the package for the MySQL server, as well as the packages for the client and

for the database common files. During the installation, supply a password for the root user for

your MySQL installation.

Now, Configure MySQL by editing ‘/etc/mysql/my.cnf’. Bind the masternode ip-address

to MySQL server and assign an open port for MySQL to run.

42

bind-address = <masternode ip-address>

port = 3306

The MySQL server is started automatically after installation.

Check the status of the MySQL server with the following command:

service mysql status

Stop the MySQL server with the following command:

service mysql stop

Start the MySQL server with the following command:

service mysql start

Disabling IPv6

Because Hadoop is not supported on IPv6 networks and has been developed and tested on

IPv4 networks, Hadoop needs to be set to only accept IPv4 clients.

Add the following configuration to /etc/sysctl.conf to disable IPv6 networks and restart

the current network.

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

Installing and Configuring Apache Sqoop

Download the Sqoop binary from the Sqoop source repository by using the following

command.

wget http://download.nextag.com/apache/sqoop/1.4.6/sqoop-1.4.6.bin__hadoop-

2.0.4-alpha.tar.gz

43

Extract the .tar.gz file and move it to the appropriate location.

tar xvzf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz /home/student/sqoop

Changing the owner and group for the Sqoop installation directory to the Hadoop

dedicated user.

sudo chown -R student:hadoop /home/student/sqoop

Adding the sqoop home directory and sqoop binary directory to path.

sudo nano ~/.bashrc

#SQOOP VARIABLES START

export SQOOP_HOME=<sqoop-home-directory>

export PATH=$PATH:$SQOOP_HOME/bin

#SQOOP VARIABLES END

sudo source ~/.bashrc

Configuring the Sqoop environmental variables by using the sqoop-env-template.sh

template.

mv $SQOOP_HOME/conf/sqoop-env-template.sh $SQOOP_HOME/conf/sqoop-

env.sh

nano $SQOOP_HOME/conf/sqoop-env.sh

export HADOOP_COMMON_HOME=<hadoop-home-directory>

export HADOOP_MAPRED_HOME=<hadoop-home-directory>

Adding the mysql-connector-java.jar to Sqoop libraries.

sudo apt-get install libmysql-java

44

ln -s /usr/share/java/mysql-connector-java.jar $SQOOP_HOME/lib/mysql-

connector-java.jar

The following command is used to verify the Sqoop version.

sqoop-version

Installing and Configuring Apache Hive

Download the Hive binary from the Hive source repository by using the following

command.

wget http://ftp.wayne.edu/apache/hive/stable/apache-hive-1.2.1-bin.tar.gz

Extract the .tar.gz file and move it to the appropriate location.

tar xvzf apache-hive-1.2.1-bin.tar.gz

mv apache-hive-1.2.1-bin.tar.gz /home/student/hive

Changing the owner and group for the Hive installation directory to the Hadoop

dedicated user.

sudo chown -R student:hadoop /home/student/hive

Adding the hive home directory and the hive binary directory to the path.

sudo nano ~/.bashrc

#Hive VARIABLES START

export HIVE_HOME=<hive-home-directory>

export PATH=$PATH:$HIVE_HOME/bin

#Hive VARIABLES END

sudo source ~/.bashrc

45

Configuring the hive environmental variables by adding HADOOP_HOME to hive-

config.sh.

nano $HIVE_HOME/bin/hive-config.sh

export HADOOP_HOME=<hadoop-home-directory>

Adding the mysql-connector-java.jar to the Hive libraries.

sudo apt-get install libmysql-java

ln -s /usr/share/java/mysql-connector-java.jar $HIVE_HOME/lib/mysql-

connector-java.jar

Now one can configure the Hive metastore service, where the Metastore service provides

the interface to Hive and the Metastore database stores the mappings to the data and data

definitions. It is important to edit the hive-site.xml file within the conf directory of Hive, so that

the MySQL database acts as the Metastore database for Hive.

cp $Hive_HOME/conf/hive-default.xml.template $Hive_HOME/conf/hive-

site.xml

nano $Hive_HOME/conf/hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://10.59.7.51/metastore_db?createDatabase

IfNotExist=true</value>

<description>Metadata is stored in a MySQL server</description>

</property>

<property>

46

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>MySQL JDBC driver class</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hiveuser</value>

<description>Username to connect to MySQL server</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hivepassword</value>

<description>Password to connect to MySQL server</description>

</property>

Configure Metastore in MySQL by creating the metastore_db database and upgrade the

tables by using the hive schema for the MySQL database.

mysql -u <username> -p

Enter password: <password>

mysql> create database metastore_db;

mysql> use metastore_db;

mysql> SOURCE $Hive_HOME/scripts/metastore/upgrade/mysql/hive-schema-

0.14.0.mysql.sql;

47

mysql> CREATE USER 'hiveuser'@'%' IDENTIFIED BY 'hivepassword';

mysql> GRANT all on *.* to 'hiveuser'@<machine-ip> identified by

'hivepassword';

mysql> flush privileges;

mysql> exit;

The following command set is used to verify the Hive installation

hive

Installing and Configuring Apache Hadoop

Download the Hadoop binary from the Hadoop source repository by using the following

command in all the virtual machines. Refer HadoopIPv6 for more details.

wget http://www-us.apache.org/dist/hadoop/common/hadoop-2.6.1/hadoop-

2.6.1.tar.gz

Extract the .tar.gz file.

tar xvzf hadoop-2.6.1.tar.gz

Changing owner and group for the Hadoop installation directory to the Hadoop dedicated

user.

sudo chown -R student:hadoop /home/student/hadoop

When configuring Hadoop be aware that it involves numerous files.

1. ~/.bashrc

#Hadoop variables start

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

export HADOOP_INSTALL=<Hadoop home directory>

48

export HADOOP_HOME=$HADOOP_INSTALL

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="$HADOOP_OPTS -

Djava.library.path=$HADOOP_HOME/lib/native"

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

#Hadoop variables end

2. hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/student/hadoop-2.6.1/hadoop_store/hdfs/namenode</value>

</property>

49

<property>

<name>dfs.namenode.http-address</name>

<value>masternode:51070</value>

</property>

</configuration>

3. yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value> org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>masternode:8026</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>masternode:8031</value>

50

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>masternode:8051</value>

</property>

</configuration>

4. mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>file:/home/student/hadoop-2.6.1/hadoop_store/mapred/local</value>

<description>Determines where temporary MapReduce data is written. It also may be

a list of directories.</description>

</property>

<property>

<name>mapred.map.tasks</name>

<value>30</value>

51

<description>As a rule of thumb, use 10x the number of slaves (i.e., number of

tasktrackers).</description>

</property>

<property>

<name>mapred.reduce.tasks</name>

<value>6</value>

<description>As a rule of thumb, use 2x the number of slave processors (i.e., number

of tasktrackers).</description>

</property>

</configuration>

5. core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/student/hadoop-2.6.1/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.default.name</name>

<value>hdfs://masternode:54310</value>

<description>The name of the default file system. A URI whose

scheme and authority determine the FileSystem implementation. The

52

uri's scheme determines the config property (fs.SCHEME.impl) naming

the FileSystem implementation class. The uri's authority is used to

determine the host, port, etc. for a filesystem.</description>

</property>

</configuration>

6. masters

student@masternode

7. slaves

student@datanode1

student@datanode2

student@datanode3

Format the Hadoop namenode with the following command

hadoop namenode –format

To start Hadoop daemons

start-all.sh

To stop Hadoop daemons

stop-all.sh

Enabling Secure Connection using SSH

Now keys can be created for the encryption process using the Digital Signature

Algorithm and Installing the authorized public key in all the nodes, so that machines can be

connected by using SSH.

53

SSH keys provide a secure way to login to a virtual server. ssh-keygen provides a key

pair which generally consists of a public key and a private key. The Public Key is placed on a

server and the Private Key is placed on client, which allows server/client communications in a

secure way. The following command is used to generate keygen, and then copied to different

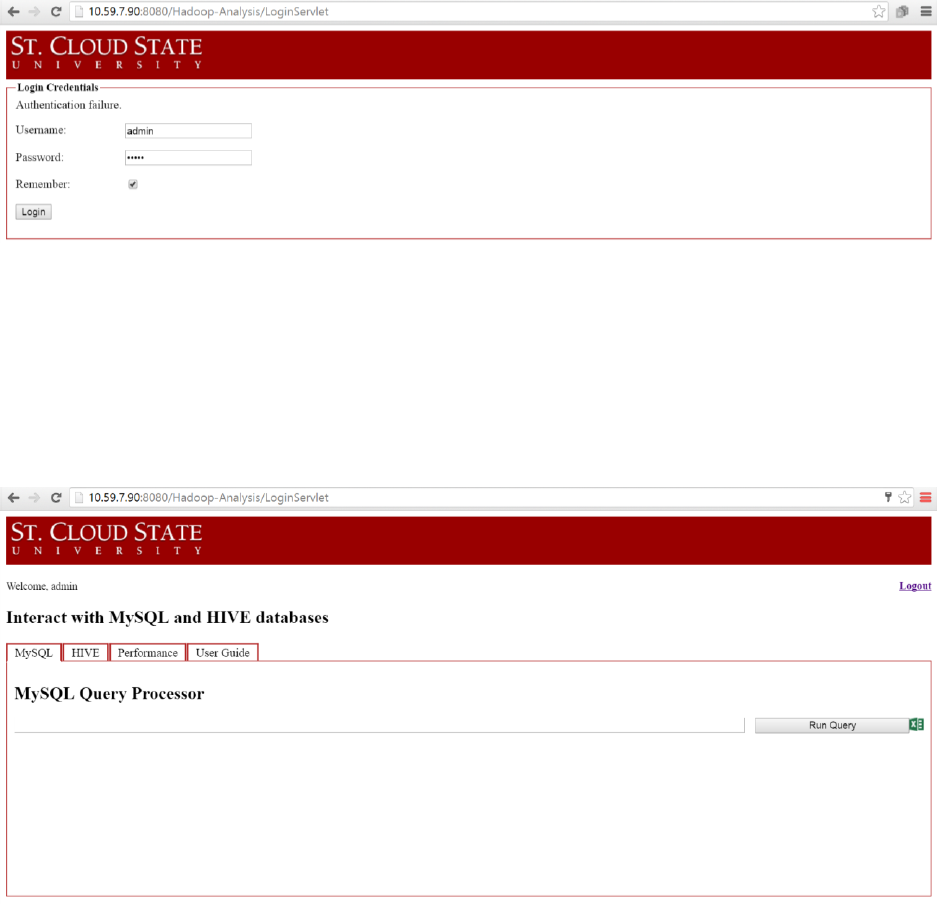

clients to establish secure communication. The following code is an example for creating keygen

in masternode and then copying it to different datanodes to ensure security between/among them.

student@masternode:~$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

student@masternode:~$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

student@masternode:~$ ssh-copy-id -i ~/.ssh/id_dsa.pub student@datanode1

student@masternode:~$ ssh-copy-id -i ~/.ssh/id_dsa.pub student@datanode2

student@masternode:~$ ssh-copy-id -i ~/.ssh/id_dsa.pub student@datanode3

Configuring Hostname

Hostnames are user readable nicknames that correspond to the IP address of a device

connected to the network.

Next configuration of the hostname and mapping the IP addresses to respective hostname

in the masternode machine can take place. Similarly, we need to configure the hostname and

map IP addresses to the hostnames in all the 3 datanodes.

sudo nano /etc/hostname

masternode

sudo nano /etc/hosts

10.59.7.90 masternode localhost

10.59.7.91 datanode1

54

10.59.7.92 datanode2

10.59.7.93 datanode3

Loading Twitter Data from Text file to MySQL

The following command is used to load data from a file structure to a MySQL database

by using MySQL connection information and the database name as parameters to the command.

student@masternode:~$ mysqlimport --user=root --password=root --fields-terminated-

by='|' --lines-terminated-by='\n' --local hadoopanalysis TweetAnalysis

Importing Data from MySQL to HDFS

The following commands are used to import data from the MySQL database to HDFS file

system using Apache Sqoop.

student@masternode:~$ sqoop import --connect

jdbc:mysql://10.59.7.90:3306/hadoopanalysis --table TwitterAnalysis --username

hiveuser --password hivepassword

student@masternode:~$ sqoop import --connect

jdbc:mysql://10.59.7.90:3306/hadoopanalysis --table CirculationLog --username hiveuser

--password hivepassword

student@masternode:~$ sqoop import --connect

jdbc:mysql://10.59.7.90:3306/hadoopanalysis --table Majors --username hiveuser --

password hivepassword

student@masternode:~$ sqoop import --connect jdbc:mysql://10.59.7.90:3306/hadoopanalysis --

table Students --username hiveuser --password hivepassword

55

Creating Tables in Hive

The following commands are used to create tables in Hive.

hive> create table TwitterAnalysis(UniqueID BIGINT,TweetID BIGINT, Time_stamp

VARCHAR(255), Tweet VARCHAR(255),FavouriteCount BIGINT, ReTweetCount

BIGINT, lang VARCHAR(255), UserID BIGINT, UserName VARCHAR(255),

ScreenName VARCHAR(255),Location VARCHAR(255), FollowersCount BIGINT,

FriendsCount BIGINT, Statuses BIGINT, Timezone VARCHAR(255))COMMENT

'TwitterAnalysis' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES

TERMINATED BY '\n';

hive> create table CirculationLog (UniqueCirculationId BIGINT, UniqueId varchar(40),

YearTerm varchar(5), TermName varchar(40), CirculationDate varchar(50), DayOfTerm

INT, hour varchar(50), action varchar(50), id varchar(50), budget varchar(50), profile_id

varchar(50), barcode varchar(50), material varchar(50), item_status varchar(50),

collection varchar(100), call_no varchar(50), description varchar(100), doc_title

varchar(500), UniqueStudentId BIGINT, CallLabel varchar(40))COMMENT

'CirculationLog' ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES

TERMINATED BY '\n';

hive> create table Majors (UniqueMajorId BIGINT, UniqueId VARCHAR(40), Major

VARCHAR(19), MajorCode VARCHAR(4), MajorProgram VARCHAR(100),

MajorDepartment VARCHAR(100), MajorSchool VARCHAR(100), MajorCollege

56

VARCHAR(100), FY VARCHAR(4), UniqueStudentId BIGINT)COMMENT 'Majors'

ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED

BY '\n';

hive> create table Students (UniqueStudentId BIGINT, UniqueId VARCHAR(40),QPP

FLOAT,HS_GPA DOUBLE, HS_GPAScale DOUBLE, HS_Rank

INT,HS_GraduationDate TIMESTAMP,HS_Name VARCHAR(100), HS_Code

VARCHAR(8), HS_City VARCHAR(40), HS_State VARCHAR(2),HS_Zip

VARCHAR(5), HS_MnSCURegion VARCHAR(2), HS_District VARCHAR(50),

HS_DistrictCode VARCHAR(8), ACTScore DOUBLE, LibraryUsed BOOLEAN)

COMMENT 'Students' ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n';

57

Table 6: Students table in Hive

Field

Type

UniqueStudentId

BIGINT

UniqueId

VARCHAR

QPP

FLOAT

HS_GPA

DOUBLE

HS_GPAScale

DOUBLE

HS_Rank

INT

HS_GraduationDate

TIMESTAMP

HS_Name

VARCHAR

HS_Code

VARCHAR

HS_City

VARCHAR

HS_State

VARCHAR

HS_Zip

VARCHAR

HS_MnSCURegion

VARCHAR

HS_District

VARCHAR

HS_DistrictCode

VARCHAR

ACTScore

DOUBLE

LibraryUsed

BOOLEAN

58

Table 7: Majors table in Hive

Field

Type

UniqueMajorId (Primary Key)

INT

UniqueId

VARCHAR

Major

VARCHAR

MajorCode

VARCHAR

MajorProgram

VARCHAR

MajorDepartment

VARCHAR

MajorSchool

VARCHAR

MajorCollege

VARCHAR

FY

VARCHAR

UniqueStudentId

INT

59

Table 8: CirculationLog table in Hive

Field

Type

Uniquecirculationid

BIGINT

UniqueId

VARCHAR

YearTerm

VARCHAR

TermName

VARCHAR

Date

VARCHAR

DateOfTerm

INT

Hour

VARCHAR

Action

VARCHAR

Id

VARCHAR

Budget

VARCHAR

Profile-id

VARCHAR

Barcode

VARCHAR

Material

VARCHAR

Item-status

VARCHAR

Collection

VARCHAR

Description

VARCHAR

Doc-title

VARCHAR

UniqueStudentId

INT

60

Table 9: TwitterAnalysis table in MySQL and Hive

Field

Type

UniqueID

BIGINT

TweetID

BIGINT

CreatedAt

VARCHAR

Tweet

VARCHAR

FavouriteCount

BIGINT

ReTweetCount

BIGINT

Lang

VARCHAR

UserID

BIGINT

UserName

VARCHAR

ScreenName

VARCHAR

Location

VARCHAR

FollowersCount

BIGINT

FriendsCount

BIGINT

Statuses

BIGINT

Timezone

VARCHAR

61

Loading Data from HDFS to Hive Tables

The following command is used to load data from an HDFS file path to Hive database

tables.

hive> load data inpath '/user/student/Students' into table Students;

hive> load data inpath '/user/student/Majors' into table Majors;

hive> load data inpath '/user/student/CirculationLog' into table CirculationLog;

hive> load data inpath '/user/student/TwitterAnalysis' into table TwitterAnalysis;

62

Chapter 5: Analysis and Results

Access to Hadoop Cluster

To visit the Hadoop Cluster web, use the following link.

http://10.59.7.90:8088/cluster, which was deployed in Business Computing Research

Laboratory of Saint Cloud State University. The below screen refers to All Applications

available in Hadoop Cluster.

Figure 7: Hadoop Cluster – All Applications

/cluster/nodes points to the Active nodes of Hadoop Cluster, the screen below refers to all

3 active data nodes in the Hadoop Cluster.

Figure 8: Hadoop Cluster – Active Nodes of the cluster

63

/cluster/nodes/lost path takes to the Lost nodes of Hadoop Cluster.

Figure 9: Hadoop Cluster – Lost Nodes of the cluster

/cluster/nodes/unhealthy path takes to the Unhealthy nodes of Hadoop Cluster.

Figure 10: Hadoop Cluster – Unhealthy Nodes of the cluster

64

/cluster/nodes/decommissioned path takes to the Decommissioned nodes of Hadoop

Cluster.

Figure 11: Hadoop Cluster – Decommissioned Nodes of the cluster

/cluster/nodes/rebooted path takes to the Rebooted nodes of Hadoop Cluster.

Figure 12: Hadoop Cluster – Rebooted Nodes of the cluster

65